Stable Diffusion

17.12.2022, Author: Bhadru and ChatGPT, mostly ChatGPT

###### 1. What are frozen text encoders?

A frozen text encoder is a pretrained natural language processing (NLP) model that converts text input into a numerical representation called an encoding (or embedding). The encoder is "frozen" in the sense that its parameters are held fixed: when the larger system around it is trained, backpropagation does not update the encoder's weights.

Frozen text encoders are typically used as one component of a larger system, where they preprocess text input before it is fed into a downstream model such as a classifier, a language model, or, in Stable Diffusion, the denoising network. The encoder maps the input text to a numerical representation, either a single fixed-length vector or a sequence of per-token vectors, which the downstream model can process more easily than raw text.
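As a rough illustration of what "frozen" means in practice, the sketch below trains a small downstream classifier on top of an encoder whose weights are only ever read. Everything here is a toy assumption made for the example: the vocabulary, the random "pretrained" vectors, the averaging encoder, and the names `encode` and `train_head` are hypothetical and do not come from any real library.

```python
import math
import random

random.seed(0)
DIM = 8

# Hypothetical "pretrained" encoder weights: one vector per vocabulary word.
# In a real system these would be loaded from a pretrained model.
VOCAB = ["good", "great", "bad", "awful", "movie"]
ENCODER_WEIGHTS = {w: [random.gauss(0, 1) for _ in range(DIM)] for w in VOCAB}


def encode(text):
    """Frozen encoder: average the known word vectors. Weights are read, never written."""
    vectors = [ENCODER_WEIGHTS[w] for w in text.split() if w in ENCODER_WEIGHTS]
    if not vectors:
        return [0.0] * DIM
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def train_head(examples, epochs=200, lr=0.5):
    """Train only the downstream logistic-regression head on the frozen encodings."""
    head = [0.0] * DIM
    bias = 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = encode(text)
            logit = sum(h * xi for h, xi in zip(head, x)) + bias
            prob = 1.0 / (1.0 + math.exp(-logit))
            grad = prob - label  # gradient of the log loss w.r.t. the logit
            head = [h - lr * grad * xi for h, xi in zip(head, x)]
            bias -= lr * grad
    return head, bias


examples = [("good movie", 1), ("great movie", 1), ("bad movie", 0), ("awful movie", 0)]
snapshot = {w: list(v) for w, v in ENCODER_WEIGHTS.items()}
head, bias = train_head(examples)
```

After training, `head` and `bias` have changed while `ENCODER_WEIGHTS` still equals `snapshot`, which is the defining property of a frozen encoder.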

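There are many different approaches to creating text encoders, and they can be trained on a variety of tasks such as language translation, sentiment analysis, or question answering. Popular pretrained models that can serve as text encoders include BERT and the GPT family, both of which are built on the Transformer architecture; Stable Diffusion uses the text encoder of CLIP.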

###### 2. So what are transformer encoders?

A transformer encoder is a type of neural network architecture that is used for natural language processing (NLP) tasks such as language translation, language modeling, and text classification. It was introduced in the paper "Attention Is All You Need" by Vaswani et al. in 2017 and has since become a popular choice for many NLP tasks due to its strong performance and ability to handle long-range dependencies in text.

The transformer encoder consists of a series of stacked self-attention layers, which allow the model to attend to different parts of the input sequence in parallel. This enables the model to effectively capture long-range dependencies in the input text, as well as handle variable-length sequences.

Each layer in the transformer encoder contains two sublayers. The first is a multi-head self-attention sublayer, in which a set of parallel attention heads apply scaled dot-product attention to the input sequence. The second is a position-wise fully-connected feed-forward network. Each sublayer is wrapped in a residual connection followed by layer normalization, and the result is fed into the next encoder layer.
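Written out in the notation of the original paper, one encoder layer maps its input $x$ to its output $y$ roughly as follows. This is the post-normalization arrangement from "Attention Is All You Need"; many later models move the layer normalization elsewhere, so treat it as a description of that paper rather than of every transformer.

```latex
\begin{aligned}
h &= \mathrm{LayerNorm}\bigl(x + \mathrm{MultiHeadSelfAttention}(x)\bigr) \\
y &= \mathrm{LayerNorm}\bigl(h + \mathrm{FFN}(h)\bigr), \qquad
\mathrm{FFN}(h) = \max(0,\; h W_1 + b_1)\, W_2 + b_2
\end{aligned}
```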

The transformer encoder was introduced as one half of a larger transformer model, which also includes a decoder component for generating output sequences. However, it can also be used as a standalone text encoder by freezing the weights of a pretrained model and using it to generate encodings of input text.

###### 3. Briefly explain the paper "Attention Is All You Need" by Vaswani et al. (2017)

"Attention Is All You Need" is a research paper published in 2017 by Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, and Illia Polosukhin. The paper introduces the transformer architecture, a neural network architecture for natural language processing (NLP) tasks such as language translation and language modeling.

The key contribution of the transformer architecture is the use of self-attention mechanisms to process the input sequence. Traditional NLP models such as recurrent neural networks (RNNs) rely on recurrence and sequential processing to capture dependencies between elements in the input sequence. In contrast, the transformer architecture uses self-attention mechanisms to allow the model to attend to different parts of the input sequence in parallel, enabling it to effectively capture long-range dependencies in the input.

The transformer architecture also includes a number of other innovations such as the use of multi-headed attention, the incorporation of positional encodings, and the use of residual connections and layer normalization. These design choices contribute to the model's strong performance on a variety of NLP tasks.
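Of these ingredients, positional encodings are the easiest to show in a few lines. Because attention itself ignores token order, the paper adds a fixed sinusoidal pattern to the input embeddings. The sketch below follows the paper's formula, with sine on even dimensions and cosine on odd ones; the function name and the tiny sizes are assumptions made for the example.

```python
import math


def positional_encoding(num_positions, d_model):
    """Return a num_positions x d_model table of sinusoidal position encodings."""
    table = []
    for pos in range(num_positions):
        row = []
        for j in range(d_model):
            # Dimensions 2i and 2i+1 share the frequency 1 / 10000^(2i / d_model).
            angle = pos / (10000 ** ((2 * (j // 2)) / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


pe = positional_encoding(num_positions=4, d_model=6)
```

Position 0 always encodes to alternating zeros and ones, since sin(0) = 0 and cos(0) = 1; later positions rotate each pair of dimensions at a different rate.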

Since its introduction, the transformer architecture has become a widely used and influential model in the field of NLP, and has been applied to a range of tasks including language translation, language modeling, and text classification.

Self-attention mechanisms are implemented using a scaled dot-product attention function. For every pair of elements in the input sequence, it takes the dot product of a query vector and a key vector, scales it by the square root of the key dimension, and applies a softmax to obtain attention weights. These attention weights determine how much each element's value vector contributes to the output of the self-attention layer.
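A minimal sketch of this computation, softmax(QKᵀ / √d_k) V, in plain Python is given below. It is deliberately simplified: a real transformer layer first multiplies the input by learned projection matrices to get the queries, keys, and values, and runs several heads in parallel. Those parts are omitted here, and the function names are assumptions made for the example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention; returns (outputs, attention weights)."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Self-attention: queries, keys, and values all come from the same sequence.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(x, x, x)
```

Passing a different sequence for `keys` and `values` than for `queries` turns the same function into cross-attention, where one sequence attends to another.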

The transformer architecture stacks multiple such layers on top of each other (six in the encoder of the original paper). Each layer combines a multi-head self-attention sublayer with a position-wise feed-forward sublayer, with a residual connection and layer normalization around each, and passes its output to the next layer.

Self-attention mechanisms have been shown to be effective at capturing long-range dependencies in the input, and are a key reason for the strong performance of the transformer architecture on a variety of NLP tasks.

###### 4. Explain diffusion models

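In network science and the social sciences, diffusion models are a class of statistical models used to describe the spread of information or influence through a network. They are commonly used to study the spread of ideas, trends, or behaviors in social networks, but can also be applied to other types of networks such as transportation or communication networks. This is a different sense of the term from the generative diffusion models used in image synthesis, including Stable Diffusion, which learn to reverse a gradual noising process.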

Diffusion models typically assume that the spread of information or influence through the network follows a certain set of rules or dynamics. These dynamics can be represented mathematically and used to model the spread of the information over time.

There are several different types of diffusion models, including deterministic models, probabilistic models, and agent-based models. Deterministic models describe the spread of information through the network using a set of differential equations, while probabilistic models describe the spread of information using probabilities. Agent-based models, on the other hand, simulate the spread of information through the network by tracking the actions of individual agents or nodes in the network.
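To make the probabilistic variant concrete, here is a sketch of one well-known example, the independent cascade model, in which each newly informed node gets a single chance to inform each neighbour with probability `p`. The choice of this particular model, the toy graph, and the function name are assumptions made for the example; the text above does not name a specific model.

```python
import random


def independent_cascade(graph, seeds, p, rng):
    """Simulate one run of the independent cascade model.

    graph: dict mapping each node to a list of its neighbours
    seeds: nodes that are informed at the start
    p:     probability that a newly informed node informs a given neighbour
    rng:   a random.Random instance, so runs are reproducible
    """
    informed = set(seeds)
    frontier = list(seeds)
    while frontier:
        next_frontier = []
        for node in frontier:
            for neighbour in graph.get(node, []):
                if neighbour not in informed and rng.random() < p:
                    informed.add(neighbour)
                    next_frontier.append(neighbour)
        frontier = next_frontier
    return informed


graph = {"a": ["b", "c"], "b": ["d"], "c": ["d"], "d": ["e"], "e": []}
rng = random.Random(42)
runs = [len(independent_cascade(graph, ["a"], 0.5, rng)) for _ in range(1000)]
average_reach = sum(runs) / len(runs)
```

Averaging over many simulated runs, as `average_reach` does, is the usual way to estimate how far information is expected to spread from a given set of seed nodes.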

Diffusion models are useful for understanding the spread of information or influence in networks and can be used to predict the likely trajectory of the spread over time. They are commonly used in fields such as social network analysis, marketing, and public health to study the spread of ideas, trends, or behaviors in networks.

Latent diffusion models (LDMs), the family to which Stable Diffusion belongs, are unrelated to the network models described above. They are generative models that first compress an image into a lower-dimensional latent representation with an autoencoder, and then learn to reverse a gradual Gaussian noising process in that latent space rather than in pixel space.

Because the denoising network operates on the smaller latent representation, training and sampling are cheaper than for diffusion models that work directly on pixels.

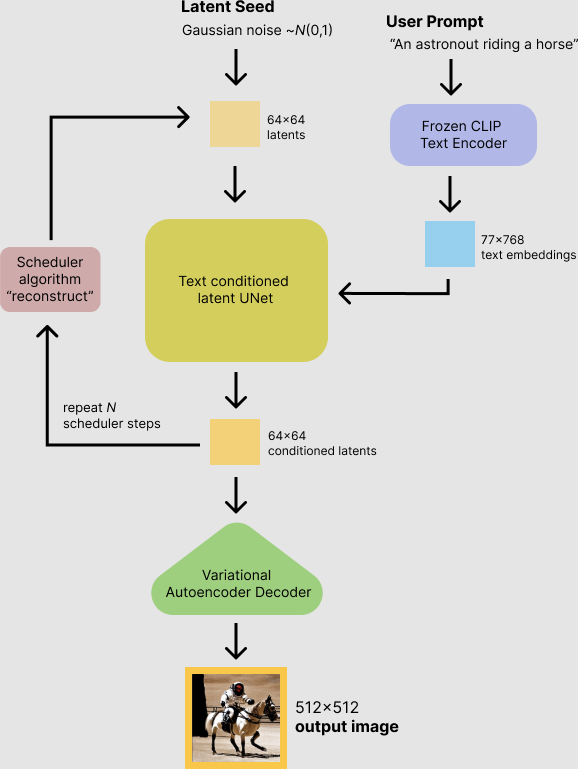

Stable Diffusion consists of three parts: a variational autoencoder (VAE), a U-Net, and an optional text encoder.

The VAE encoder compresses the image from pixel space into a lower-dimensional latent space that captures the more fundamental semantic content of the image. During forward diffusion, Gaussian noise is iteratively added to this compressed latent representation. The U-Net, which is built on a ResNet backbone, then works in the reverse direction: it denoises the output of forward diffusion step by step to recover a latent representation.
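The forward (noising) half of this process is simple enough to sketch directly. The code below assumes the standard DDPM formulation, in which the noisy latent at step t can be sampled in one shot as z_t = √(ᾱ_t) · z_0 + √(1 − ᾱ_t) · ε with ε drawn from a standard Gaussian. The linear schedule, its endpoint values, the number of steps, and the toy four-element "latent" are assumptions made for the example and are not Stable Diffusion's actual settings.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, linearly spaced (endpoint values are assumptions)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t: running product of (1 - beta_s) for all steps s up to t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(latent, t, alpha_bars, rng):
    """Sample the noisy latent at step t directly from the clean latent."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [signal_scale * z + noise_scale * rng.gauss(0.0, 1.0) for z in latent]


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
z0 = [1.0, -0.5, 0.25, 2.0]  # toy stand-in for a flattened VAE latent
z_early = add_noise(z0, 10, alpha_bars, rng)   # still close to z0
z_late = add_noise(z0, 999, alpha_bars, rng)   # almost pure Gaussian noise
```

The U-Net's job during training is then to predict the noise that was added, given the noisy latent and the step index, which is what allows it to run the process backwards at generation time.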

Finally, the VAE decoder generates the final image by converting the representation back into pixel space. The denoising step can be flexibly conditioned on a string of text, an image, or other modalities. The encoded conditioning data is exposed to the denoising U-Net via a cross-attention mechanism. For conditioning on text, the fixed, pretrained CLIP ViT-L/14 text encoder is used to transform text prompts into an embedding space. Researchers point to increased computational efficiency for training and generation as an advantage of latent diffusion models (LDMs).
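A quick back-of-the-envelope calculation shows where the efficiency gain comes from. The numbers below assume the commonly cited Stable Diffusion v1 configuration (512×512 RGB images, a VAE that downsamples each side by a factor of 8, and 4 latent channels); none of them are stated in the text above, so treat them as assumptions.

```python
def num_values(height, width, channels):
    """Number of scalar values in a height x width x channels tensor."""
    return height * width * channels


IMAGE_SIZE = 512        # assumed image side length in pixels
DOWNSAMPLE_FACTOR = 8   # assumed VAE spatial downsampling factor
LATENT_CHANNELS = 4     # assumed number of latent channels

pixel_values = num_values(IMAGE_SIZE, IMAGE_SIZE, 3)
latent_side = IMAGE_SIZE // DOWNSAMPLE_FACTOR
latent_values = num_values(latent_side, latent_side, LATENT_CHANNELS)
compression = pixel_values / latent_values

print(pixel_values, latent_values, compression)  # 786432 16384 48.0
```

Under these assumptions the U-Net denoises a tensor with 48 times fewer values than the image it ultimately produces.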

The Stable Diffusion model was trained on three subsets of LAION-5B: laion2B-en, laion-high-resolution, and laion-aesthetics v2 5+.